Mark Zuckerberg’s approach to fact-checking and free speech at Meta is a complex issue. His evolving stance on content moderation, and its impact on free expression on Meta’s platforms, is at the heart of this discussion. We’ll look at his public pronouncements, examine how Meta’s policies have been implemented, and analyze the potential repercussions for free speech online. Along the way we will consider contrasting views from other tech leaders, the pros and cons of Meta’s approach, and case studies of content moderation.

Ultimately, we aim to understand the public perception of Meta’s policies and foresee future trends in fact-checking and free speech.

This exploration will cover the intricacies of fact-checking and its intersection with free speech on social media. We’ll dissect the arguments surrounding platform interventions in content moderation, examining how fact-checking initiatives have influenced information dissemination. Different stakeholder perspectives, from users to policymakers, will be examined, providing a holistic view of this critical issue. Specific examples of content moderation will be analyzed, along with the reasoning behind moderation decisions, and the impact on social media discourse.

A comprehensive overview of Meta’s fact-checking methods, including the criteria for labeling content and the process of flagging, labeling, or removing content, will also be presented.

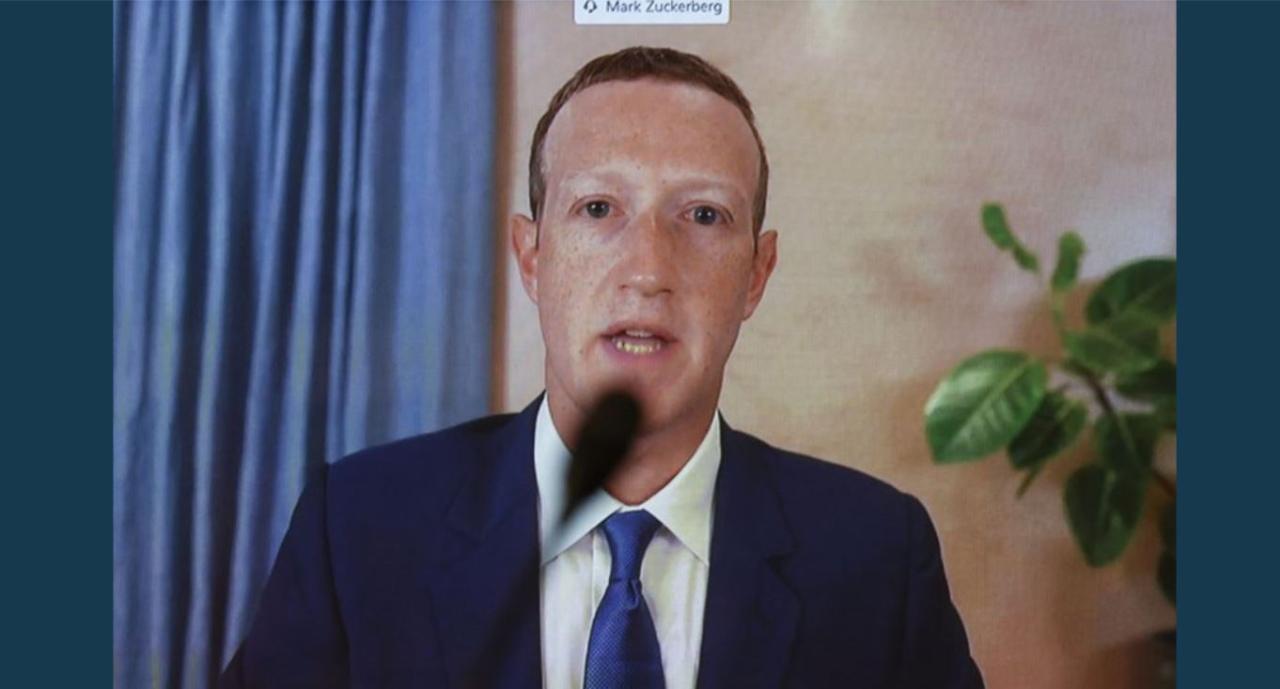

Mark Zuckerberg’s Stance on Fact-Checking

Mark Zuckerberg’s public pronouncements on fact-checking on Meta’s platforms have been a complex and evolving narrative. Initially, Meta’s approach to combating the spread of misinformation was met with criticism and a perceived lack of clear direction. Zuckerberg’s views on fact-checking have since been articulated more explicitly, and Meta has implemented policies in response. Zuckerberg’s approach to fact-checking reflects a tension between the desire to combat harmful misinformation and the need to protect free speech principles.

This has led to significant public discussion and debate about the balance between these two competing values. Meta’s policies, informed by Zuckerberg’s evolving views, have implications for the online discourse landscape, and raise questions about the role of social media platforms in shaping public opinion.

Mark Zuckerberg’s Public Statements on Fact-Checking

Zuckerberg’s public statements on fact-checking have evolved from a more neutral stance to one that acknowledges the need for greater oversight and moderation of content. Early statements focused on the platform’s role in connecting people, without explicitly addressing the issue of misinformation. However, as the prevalence of false and misleading information became more apparent, Zuckerberg has increasingly emphasized the need for fact-checking mechanisms.

Meta’s Fact-Checking Policies

Meta has implemented various policies based on its evolving stance on fact-checking. These policies aim to address misinformation and harmful content while preserving the integrity of the platform’s free speech principles. The policies often involve partnerships with third-party fact-checking organizations. These organizations assess the veracity of claims and provide ratings or labels to indicate their accuracy.

Impact on Free Speech Discourse

Meta’s fact-checking policies have had a significant impact on free speech discourse. Proponents argue that these policies help to combat the spread of misinformation and protect vulnerable users. Critics, however, argue that these policies can be used to censor legitimate viewpoints and stifle dissenting opinions. The potential for bias in fact-checking organizations is a significant concern.

Comparison of Tech CEOs’ Positions on Fact-Checking

| CEO | Stance on Fact-Checking | Examples of Policies |
|---|---|---|
| Mark Zuckerberg (Meta) | Evolving from a neutral stance to one that acknowledges the need for greater oversight and moderation. | Partnerships with third-party fact-checking organizations, content labeling, and removal policies. |
| Sundar Pichai (Google) | Acknowledges the need to address harmful content, but with a greater emphasis on user reporting and community guidelines. | Content removal policies based on user reports, and algorithmic filtering for harmful content. |
| Tim Cook (Apple) | Focuses on user privacy and safety while maintaining a neutral position on content moderation. | Content rating and review processes, but less directly involved in fact-checking. |

This table illustrates the differing approaches of major tech CEOs to the issue of fact-checking. It is important to note that these approaches often reflect the unique characteristics and responsibilities of each company.

Impact on Free Speech

The relationship between fact-checking and free speech on social media platforms is complex and contentious. While proponents argue that fact-checking can combat the spread of misinformation and harmful content, critics express concerns about potential censorship and the chilling effect on legitimate expression. This tension underscores the need for careful consideration of the role platforms play in regulating content. The debate surrounding platform interventions in content moderation is multifaceted.

Arguments for intervention often center on the platform’s responsibility to prevent the spread of harmful content, including misinformation, hate speech, and incitement to violence. Conversely, arguments against intervention frequently highlight the potential for abuse of power, the suppression of dissenting opinions, and the infringement on freedom of expression. This complex balancing act requires a nuanced approach that acknowledges both the potential benefits and risks of platform moderation.

Fact-Checking Initiatives and Information Spread

Fact-checking initiatives, while aiming to improve the quality of information shared on social media, have faced challenges in effectively combating the spread of misinformation. The speed at which false or misleading information can proliferate online often outpaces the ability of fact-checking organizations to debunk it. This dynamic underscores the difficulty of mitigating the impact of false narratives. Moreover, the effectiveness of fact-checking initiatives depends heavily on the engagement and trust of users.

Users who already distrust or dismiss the platform’s fact-checking efforts may not engage with the corrections, thus failing to counteract the initial misinformation.

Meta’s Policies and Stakeholder Perceptions

Meta’s policies on fact-checking and content moderation have drawn different responses from different stakeholders. Users often express concerns about perceived bias in fact-checking processes, while journalists argue that Meta’s policies sometimes impede their ability to report on critical issues. Policymakers have debated the appropriate balance between platform responsibility and individual freedom of expression. These perspectives demonstrate the complexities inherent in regulating content on social media.

Pros and Cons of Meta’s Approach

| Aspect | Pros | Cons |
|---|---|---|
| User Experience | Potentially improved information quality through fact-checking; increased transparency regarding content moderation policies. | Potential for user frustration with fact-checking interventions; perceived bias in fact-checking processes; potential for chilling effect on legitimate expression. |
| Platform Responsibility | Combating the spread of misinformation and harmful content; upholding a certain standard of information quality on the platform. | Risk of censorship or suppression of legitimate opinions; potential for abuse of power in content moderation; challenges in balancing free speech and platform responsibility. |
| Public Trust | Building trust by addressing misinformation; enhancing public perception of platform responsibility. | Potential for eroding public trust if fact-checking efforts are perceived as biased or ineffective; challenges in maintaining transparency and accountability in content moderation. |

Meta’s Fact-Checking Mechanisms

Meta’s approach to fact-checking is a complex system, designed to address the spread of misinformation and disinformation on its platforms. While aiming to maintain a balance between protecting free expression and curbing the harmful effects of false or misleading content, the methods employed are constantly evolving and adapting to new challenges. This approach involves a multifaceted strategy, encompassing various techniques and criteria. Meta utilizes a combination of automated systems and human review to identify and address potentially problematic content.

This multifaceted approach is intended to ensure accuracy and accountability, while also considering the potential impact of their actions on the wider conversation.

Fact-Checking Methods Employed by Meta

Meta employs a range of methods to verify the accuracy of content. These include automated tools for initial screening and human reviewers for more in-depth analysis. The process prioritizes speed and efficiency while maintaining accuracy.

- Automated Content Analysis: Sophisticated algorithms analyze content for keywords, patterns, and links to known sources of misinformation. This initial screening process flags potential inaccuracies for further review.

- Human Review: Human fact-checkers assess the flagged content, cross-referencing it with multiple sources, including news articles, academic journals, and government reports. The human element ensures a nuanced understanding of context and intent.

- Third-Party Fact-Checkers: Meta collaborates with independent fact-checking organizations to verify information. This partnership leverages the expertise and resources of established organizations, bolstering the credibility of their fact-checking efforts.

Criteria for Determining Falsehood or Misleading Content

The criteria for determining if content is false or misleading are multifaceted. These criteria are designed to be comprehensive and adaptable, addressing the complexity of misinformation.

- Verification against Multiple Sources: The accuracy of a claim is evaluated by examining it against various credible sources. If multiple reputable sources contradict the claim, it is flagged as potentially false.

- Contextual Analysis: The content’s context is crucial in determining if it’s misleading. Claims that are true in one context might be false or misleading in another.

- Intent of the Poster: In some cases, the intent behind a post is considered. A post that is objectively false but presented as satire or humor might not be flagged.
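The three criteria above can be illustrated with a small sketch. This is a hypothetical toy, not Meta’s actual logic: the function name `assess_claim`, the `min_contradictions` threshold, and the verdict labels are all assumptions made for illustration. It also leaves contextual analysis to a human reviewer, who here simply supplies the `is_satire` judgment.

```python
from typing import Sequence


def assess_claim(
    source_supports: Sequence[bool],
    is_satire: bool = False,
    min_contradictions: int = 2,  # hypothetical threshold, not a documented value
) -> str:
    """Return a verdict label for a claim.

    source_supports holds one entry per credible source consulted:
    True if the source supports the claim, False if it contradicts it.
    """
    # Intent of the poster: satire or humor is not flagged in this sketch.
    if is_satire:
        return "not_flagged"

    # Verification against multiple sources: count the contradicting sources.
    contradictions = sum(1 for supports in source_supports if not supports)
    if contradictions >= min_contradictions:
        return "potentially_false"
    return "not_flagged"
```

Requiring more than one contradicting source is a design choice in this sketch: it reflects the article’s wording that a claim is flagged when multiple reputable sources contradict it.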

Process for Content Flagging, Labeling, or Removal

Meta’s process for handling flagged content involves a series of steps, from initial identification to final action.

- Initial Flagging: Automated systems flag content that matches predefined criteria or alerts generated by third-party fact-checkers.

- Human Review: Trained fact-checkers review the flagged content to determine if it’s false or misleading. This review process involves verifying information against multiple sources and assessing context.

- Content Modification: Depending on the severity of the inaccuracy, content may be labeled, restricted, or removed. Labels, for example, can warn users about the potential inaccuracy of the information shared.
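The three steps above can be sketched as a small pipeline. Everything here is an assumption for illustration: the watch phrases, the 0 to 3 severity scale, and the function names are hypothetical and do not describe Meta’s real systems.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"
    LABEL = "label"
    RESTRICT = "restrict"
    REMOVE = "remove"


# Hypothetical watch list; a real system would rely on far richer signals.
WATCH_PHRASES = ("miracle cure", "election is cancelled")


def auto_flag(text: str) -> bool:
    """Step 1: automated screening flags text that matches predefined criteria."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in WATCH_PHRASES)


def choose_action(flagged: bool, reviewer_severity: int) -> Action:
    """Steps 2 and 3: map a human reviewer's severity rating to an action.

    reviewer_severity is an assumed scale: 0 = accurate, 1 = minor inaccuracy,
    2 = misleading, 3 or more = severe.
    """
    if not flagged or reviewer_severity <= 0:
        return Action.NONE
    if reviewer_severity == 1:
        return Action.LABEL
    if reviewer_severity == 2:
        return Action.RESTRICT
    return Action.REMOVE
```

In this sketch, content that was never flagged is left alone regardless of severity, which mirrors the order of the steps described above: human review only runs on content that the initial screen has surfaced.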

Comparison with Other Platforms

Meta’s fact-checking methods differ from those of other platforms. Each platform employs various strategies, reflecting different resources and priorities.

| Platform | Fact-Checking Method | Strengths | Weaknesses |
|---|---|---|---|
| Meta | Combination of automated and human review, third-party collaboration | Comprehensive approach, diverse verification methods | Potential for bias in algorithms, varying effectiveness across different content types |
| Other Platforms | Varies significantly, from limited labeling to complete removal | May focus on specific types of misinformation or regions | May lack the same level of resources or expertise as Meta |

Categories of Content Fact-Checked by Meta

Meta’s fact-checking efforts extend to various categories of content. The categories are designed to address a broad spectrum of misinformation.

- Political Claims: Statements related to political figures, policies, and events are subject to fact-checking.

- Health Claims: Information related to health, medical conditions, and treatments is scrutinized for accuracy.

- Social Issues: Statements on social issues, such as racial tensions, social justice movements, or human rights are assessed for veracity.

- Economic Information: Statements related to economic trends, market analysis, or financial information are examined.

Public Perception of Meta’s Policies

Meta’s stance on fact-checking and free speech has sparked considerable public debate, eliciting a wide range of reactions from diverse groups. The company’s attempts to regulate information have been met with both praise and criticism, significantly influencing public discourse on the role of social media platforms in shaping public opinion and disseminating news. Understanding these varying perspectives is crucial to comprehending the evolving landscape of online content moderation. Public reactions to Meta’s policies on fact-checking and free speech have been complex and multifaceted, with significant variations in opinions across different demographics and ideologies.

These differing viewpoints stem from diverse interpretations of the balance between maintaining a safe and informed online environment and protecting freedom of expression.

Criticisms of Meta’s Fact-Checking

Concerns about Meta’s fact-checking initiatives often center on accusations of bias and censorship. Critics argue that Meta’s algorithms and human moderators may not be objective and could disproportionately target content from certain viewpoints. Furthermore, some believe that fact-checking mechanisms can stifle legitimate dissenting opinions or viewpoints, potentially leading to a homogenization of information. There are also concerns about the potential for abuse of power, where the platform’s fact-checking process could be used to silence political opponents or marginalize certain groups.

Support for Meta’s Fact-Checking

Conversely, there is support for Meta’s fact-checking efforts, particularly from those concerned about the spread of misinformation and its potential to harm individuals and society. These individuals highlight the crucial role of platforms in combating the proliferation of false information, which can have serious consequences in areas like public health, elections, and financial markets. The argument is that responsible fact-checking can help maintain a more informed public discourse and protect vulnerable populations from malicious content.

Diverse Perspectives on Meta’s Role in Regulating Information

| Perspective | Arguments | Examples |
|---|---|---|
| Pro-Regulation | Platforms should actively moderate harmful content, including misinformation, to protect users and maintain a safe online environment. | Concerns about the spread of misinformation during elections, the impact of false health claims, or the potential for incitement of violence. |
| Pro-Free Speech | Platforms should not censor or regulate content, respecting the right to free expression even for controversial or unpopular views. | Concerns about potential abuse of power by platforms, the risk of stifling dissent, or the potential for biased fact-checking. |
| Neutral | A nuanced approach is needed, balancing the need for safety with the right to free expression. | Calls for transparency in fact-checking processes, greater accountability for platforms, and the development of more robust mechanisms for appeals and reviews. |

User Feedback and Commentary

Numerous examples of user feedback illustrate the diverse opinions on Meta’s policies. Comments on social media posts and forums often express strong support or opposition to the company’s initiatives. For example, some users have praised Meta for its efforts to combat misinformation, while others have criticized the platform for suppressing dissenting opinions. These diverse reactions demonstrate the significant impact that Meta’s policies have on public discourse.

Case Studies of Content Moderation

Meta’s approach to content moderation is a complex balancing act between upholding community standards and protecting free speech. The platform’s policies, as we’ve seen, are not without their critics, and the impact of specific moderation decisions on online discourse is a subject of ongoing debate. Examining case studies offers a window into the practical application of these policies and their effects on the broader social media landscape.

Examples of Fact-Checked Content

Meta’s fact-checking program targets content that demonstrably misrepresents factual information. This includes claims about elections, health, and scientific phenomena. The platform partners with independent fact-checking organizations to assess the accuracy of these assertions.

- A post claiming a specific medical treatment cures a disease is flagged by Meta’s system and reviewed by fact-checkers. After verification, the post is labeled with a disclaimer, informing users that the claim lacks scientific backing. This action seeks to prevent the spread of misinformation that could potentially harm users.

- A post disseminating false information about an upcoming election, designed to sway public opinion, is flagged and investigated. The fact-checking team, after consulting reputable sources, determines the post contains false information. The post is marked as disputed and accompanied by a link to verified information.

Examples of Removed Content

Meta also removes content that violates its community standards, including hate speech, incitement to violence, and harassment. The platform uses a multi-layered approach, combining automated systems with human review to assess the severity and nature of the violations.

- A post containing hate speech targeting a specific religious group is flagged and reviewed. Meta’s automated systems initially identify the post as potentially harmful, and human reviewers confirm its violation of community standards. The post is removed, and the account may face further restrictions depending on the severity and frequency of the violations.

- A post encouraging violence against a specific political figure is flagged by Meta’s automated system. Human review validates the automatic identification, and the post is removed to mitigate the risk of real-world harm. The account may face suspension or termination depending on the severity and frequency of the violations.

Impact on Social Media Discussions

The removal or fact-checking of content can significantly impact the flow and nature of discussions on social media. It can lead to increased scrutiny of claims, a greater reliance on verifiable information, and potential shifts in public perception.

- When a post claiming a medical cure is labeled as false, the discussion around the purported cure often shifts towards more credible sources for information. This can lead to a reduction in the spread of misinformation and encourage users to consult with healthcare professionals.

- In cases of election misinformation, the labeling or removal of such posts may limit the reach of false narratives. This could shape public opinion and encourage users to rely on official sources for accurate information.

Comparison with Other Platforms

The approaches and outcomes of content moderation vary across social media platforms. Some platforms take a more restrictive stance, while others prioritize user freedom of expression. The effectiveness of different strategies is a subject of ongoing debate.

Categorized Case Studies of Misinformation

| Type of Misinformation | Examples | Reasoning | Impact on Social Media |
|---|---|---|---|
| Health Claims | Claims about cures, treatments, or prevention methods | Potential harm to public health | Shift towards seeking verified information from healthcare professionals |
| Political Misinformation | False information about elections or political figures | Potential impact on democratic processes | Reduced spread of false narratives, greater reliance on official sources |
| Hate Speech | Content targeting specific groups based on race, religion, or other factors | Violation of community standards, potential for harassment | Reduced instances of harassment and discrimination |

Future Trends in Fact-Checking and Free Speech

The intersection of fact-checking and free speech is a complex and evolving landscape. As technology advances, platforms face increasing pressure to balance the desire for open discourse with the need to combat the spread of misinformation. This necessitates a nuanced understanding of the evolving challenges and opportunities. The future will likely see a greater emphasis on proactive measures, rather than reactive ones, in identifying and addressing false information.

Potential Developments in Fact-Checking Technology

Fact-checking is rapidly evolving beyond human review. Machine learning algorithms are increasingly capable of identifying patterns in language and content, flagging potential inaccuracies with greater speed and scale than ever before. Natural language processing (NLP) is being integrated into fact-checking tools, enabling more sophisticated analysis of text and context. This automation is poised to significantly increase the efficiency of fact-checking, potentially enabling near real-time identification of false claims.

Furthermore, the development of AI-powered fact-checking tools will likely lead to a more personalized experience, tailored to individual users and their information consumption habits. This could involve dynamic fact-checking pop-ups or contextual information presented alongside potentially misleading content.

Emerging Challenges in Balancing Free Speech and Accuracy

The central difficulty lies in defining and applying standards for fact-checking: establishing clear guidelines for what constitutes misinformation while ensuring that legitimate opinions and dissenting views are not stifled. Determining the appropriate level of intervention is crucial. Overzealous fact-checking could lead to censorship and stifle legitimate debate, whereas insufficient intervention could allow harmful falsehoods to proliferate unchecked.

This necessitates a constant dialogue between platform moderators, researchers, and policymakers to establish universally agreed-upon standards. Examples of such dialogues are seen in ongoing discussions about the regulation of political advertising and the use of social media during election cycles.

Impact of Technological Advancements on Content Moderation

Technological advancements will inevitably reshape content moderation practices. AI-powered tools for identifying harmful content, such as hate speech and harassment, will become more prevalent. However, the ethical implications of AI-driven moderation need careful consideration. Algorithms could inadvertently perpetuate biases or misinterpret nuanced contexts. Human oversight remains critical in ensuring that these systems are used responsibly and equitably.

Moreover, the ability to analyze vast amounts of data will allow for the detection of emerging trends and patterns in harmful content, enabling preemptive measures and improved response strategies.

Innovative Approaches to Content Moderation

Innovative approaches to content moderation are emerging. Platforms are experimenting with user-driven flagging systems, community reporting mechanisms, and AI-powered tools for content categorization. These initiatives strive to empower users in identifying potentially harmful content while simultaneously mitigating the potential for manipulation and abuse of these systems. Platforms are also exploring the use of gamification to encourage responsible content sharing and engagement.

For example, users could earn points or badges for sharing verified information or reporting misleading content. Furthermore, the use of interactive fact-checking quizzes and educational materials could help foster media literacy and critical thinking skills among users.

Forecasting Future Trends in Platform Moderation

| Trend Category | Potential Development | Impact | Example |
|---|---|---|---|
| AI-Driven Fact-Checking | Advanced NLP and machine learning algorithms will be widely integrated into platforms. | Increased speed and scale of fact-checking; potentially near-real-time identification of misinformation. | Meta’s use of AI for identifying hate speech. |
| Personalized Fact-Checking | Fact-checking will become more tailored to individual users’ information consumption habits. | Enhanced user experience; potentially reduced exposure to misinformation. | Recommendation engines flagging potentially misleading content based on user history. |
| Community-Based Moderation | Platforms will rely more on user reporting and feedback mechanisms for content moderation. | Increased user engagement; more nuanced identification of harmful content. | Reddit’s use of user-moderated communities. |
| Proactive Content Filtering | Platforms will proactively identify and address emerging trends in misinformation and harmful content. | Reduced spread of misinformation; early detection of emerging threats. | Identifying and mitigating the spread of misinformation related to specific current events. |
| Transparency and Accountability | Platforms will increase transparency in their content moderation policies and procedures. | Improved user trust and understanding of moderation processes. | Openly publishing moderation guidelines and algorithms. |

Ending Remarks

In conclusion, Mark Zuckerberg’s stance on fact-checking within the Meta ecosystem has profound implications for free speech online. The interplay between these concepts raises complex questions about platform responsibility and the future of information dissemination. Public perception plays a significant role, influencing discourse and shaping policy. The ongoing debate regarding Meta’s role in regulating information is multifaceted, and the future trends in fact-checking and content moderation will be heavily influenced by the outcome of this dialogue.

Understanding the nuances of this issue is crucial for navigating the evolving landscape of social media.